From January to March 2025, I worked with Fei Xia and others at DeepMind and Creative Lab to produce a series of robotics demonstrations of embodied reasoning. The work was a natural fit for my Gemini Live Web Console.

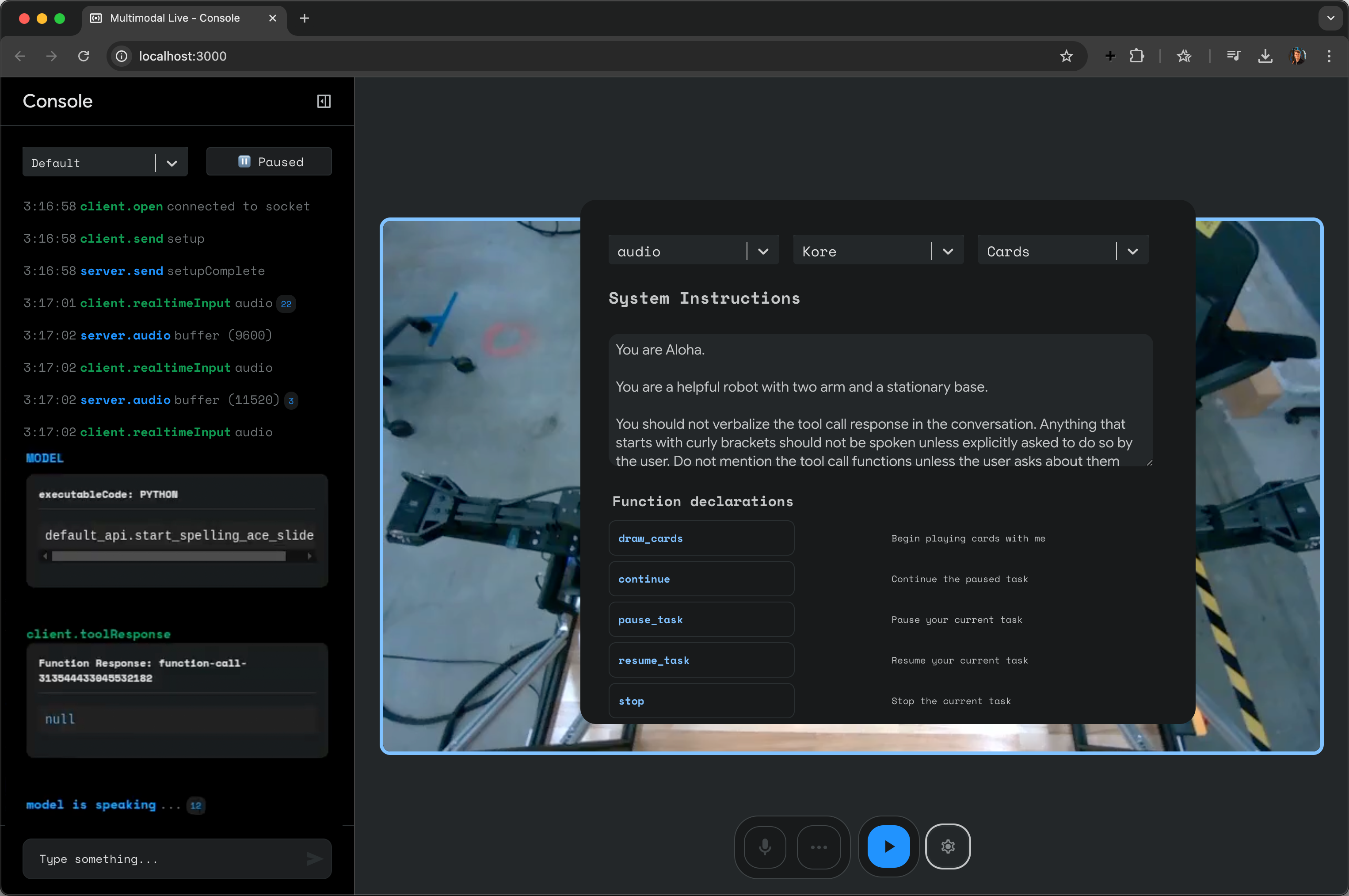

The Gemini Live Web Console is a starter project, written in React and TypeScript, that manages the WebSocket connection, worklet-based audio processing, logging, and streaming of your webcam or screen share.
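As an illustration of what the worklet-based audio path has to do, browser audio arrives as floating-point samples and is usually converted to integer PCM before being sent over the socket. The sketch below shows that one step. It assumes 16-bit signed PCM as the wire format, and `floatTo16BitPcm` is a hypothetical helper name rather than a function taken from the console's source.

```typescript
// Minimal sketch: convert Web Audio float samples (nominally -1..1)
// to 16-bit signed PCM. The target format is an assumption; check the
// API you are streaming to before relying on it.
function floatTo16BitPcm(samples: Float32Array): Int16Array {
  return Int16Array.from(samples, (sample) => {
    // Clamp first so out-of-range samples saturate instead of wrapping.
    const clamped = Math.max(-1, Math.min(1, sample));
    // Negative values scale by 32768 and positive values by 32767,
    // so both ends of the int16 range are reachable.
    return clamped < 0
      ? Math.round(clamped * 0x8000)
      : Math.round(clamped * 0x7fff);
  });
}
```

In an AudioWorklet, a helper like this would typically run on each block of samples before the result is posted back to the main thread for sending.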

Working with robotics and AI researchers in New York, London and Mountain View, I helped build servers, natural language tools and custom demonstrations for three types of robots: a humanoid (Atari), a bi-arm robot (Aloha) and a 9-DOF industrial robot (Omega).

In this demonstration, the camera feed from the robot is sent to Gemini's Embodied Reasoning model, which responds with vectors spanning objects. This appears repeatedly in the demonstrations, for example when Aloha is folding an origami fox and the model suggests where the fox's eyes should be.
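To draw such vectors over the camera image, the model's coordinates have to be mapped to pixels. The sketch below shows one way to do that. The response format is not described here, so everything about `SpanVector` is an assumption: the field names are hypothetical, and the points are assumed to be `[y, x]` pairs normalized to a 0 to 1000 range.

```typescript
// Hypothetical response shape; the actual model output may differ.
interface SpanVector {
  label: string;
  // [y, x] pairs, assumed to be normalized to the range 0..1000.
  start: [number, number];
  end: [number, number];
}

interface PixelVector {
  label: string;
  x1: number;
  y1: number;
  x2: number;
  y2: number;
}

// Scale a normalized vector to pixel coordinates for a frame of the given size.
function toPixels(v: SpanVector, width: number, height: number): PixelVector {
  const scale = (value: number, size: number): number =>
    Math.round((value / 1000) * size);
  return {
    label: v.label,
    x1: scale(v.start[1], width),
    y1: scale(v.start[0], height),
    x2: scale(v.end[1], width),
    y2: scale(v.end[0], height),
  };
}
```

Keeping the model's output normalized and scaling only at draw time means the same response can be rendered over a video element of any size.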

New tools were developed for the project, some of which have since been added to the GitHub repository, for example this settings panel, which lets you view the registered tools and modify the system instructions.

Supporting Materials

- DeepMind: Gemini Robotics brings AI into the Physical World

- Google - The Keyword: Gemini Robotics and Gemini Robotics-ER are two new Gemini models designed for robotics

- DeepMind: Gemini Robotics

- YouTube: Gemini Robotics: Bringing AI to the Physical World